Meta-analyses are not a 'ready-to-eat' dish that necessarily satisfies our desire for 'knowledge' - they require as much inspection as any primary data paper and indeed afford closer inspection...as we have access to all of the data. Since the turn of the year, 5 meta-analyses have examined Cognitive Behavioural Therapy (CBT) for schizophrenia and psychosis. The new year started with the publication of our meta-analysis (Jauhar et al 2014), which has received some comment on the BJP website, something I wholly encourage; however, the 4 further meta-analyses in the last 4 months have received little or no commentary...so I will briefly offer my own.

Slow Motion (Ultravox)

1) Turner, van der Gaag, Karyotaki & Cuijpers (2014) Psychological Interventions for Psychosis: A Meta-Analysis of Comparative Outcome Studies

Turner et al assessed 48 Randomised Controlled Trials (RCTs) involving 6 psychological interventions for psychosis (e.g. befriending, supportive counselling, cognitive remediation) and found CBT was significantly more efficacious than other interventions (pooled together) in reducing positive symptoms and overall symptoms (g = 0.16 [95% CI 0.04 to 0.28] for both), but not negative symptoms (g = 0.04 [95% CI -0.09 to 0.16]) of psychosis.

However, the one small effect described by Turner et al as robust - for positive symptoms - became nonsignificant when researcher allegiance was assessed. Turner et al rated each study for allegiance bias along several dimensions, and essentially CBT only reduced symptoms when researchers had a clear allegiance bias in favour of CBT - and this bias occurred in over 75% of CBT studies.

Comments:

One included study (Barretto et al) did not meet Turner et al's own inclusion criterion of random assignment. Barretto et al state "The main limitations of this study are ...this trial was not truly randomized" (p.867). Rather, patients were consecutively assigned to groups, and the groups differed on baseline background variables, such as age of onset being 5 years earlier in controls than in the CBT group (18 vs 23). Crucially, some effect sizes in the Barretto study were large (approx. 1.00 for PANSS total and for BPRS). Being non-random, it should be excluded, and with 95% Confidence Intervals hovering so close to zero, this makes a big difference - I shall return to this Barretto study again below.
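To see why one large, non-random study matters when a confidence interval sits just above zero, here is a minimal sketch of a leave-one-out check. Every number in it is hypothetical (these are not Turner et al's data), and inverse-variance fixed-effect pooling is assumed purely for simplicity; the meta-analysis itself may have used a different model.

```python
import math

def pool_fixed(effects, ses):
    """Inverse-variance fixed-effect pooled estimate with a 95% CI."""
    weights = [1.0 / se ** 2 for se in ses]
    pooled = sum(w * g for w, g in zip(weights, effects)) / sum(weights)
    se_pooled = math.sqrt(1.0 / sum(weights))
    return pooled, pooled - 1.96 * se_pooled, pooled + 1.96 * se_pooled

# HYPOTHETICAL trials: (label, Hedges' g, standard error).
trials = [
    ("Trial A", 0.10, 0.15),
    ("Trial B", 0.05, 0.12),
    ("Trial C", 0.12, 0.14),
    ("Trial D", 0.08, 0.16),
    ("Non-random outlier", 1.00, 0.25),  # stands in for a study like Barretto et al
]

def summarise(rows):
    """Pooled g, lower and upper 95% CI bounds, rounded to 2 decimals."""
    g, lo, hi = pool_fixed([r[1] for r in rows], [r[2] for r in rows])
    return round(g, 2), round(lo, 2), round(hi, 2)

with_outlier = summarise(trials)
without_outlier = summarise(trials[:-1])

print("all trials:      ", with_outlier)
print("outlier excluded:", without_outlier)
```

With these made-up inputs the lower bound of the interval is above zero when the outlier is included and below zero once it is dropped, which is the kind of shift at stake when the pooled interval is as close to zero as 0.04.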

Translucence (Harold Budd & John Foxx)

2) Burns, Erickson & Brenner (2014) Cognitive Behavioural Therapy for medication-resistant psychosis: a meta analytic review

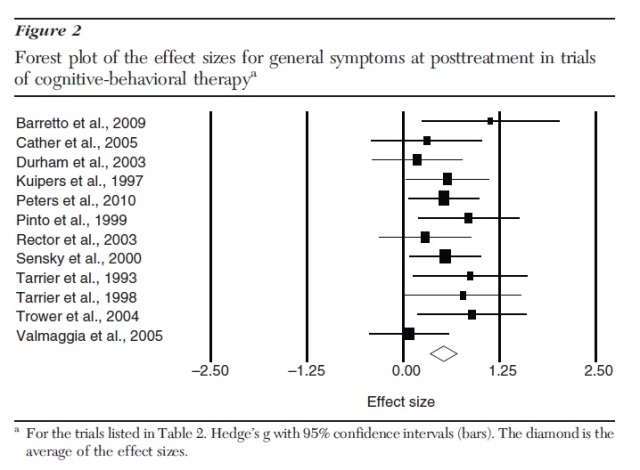

Burns et al examined CBT’s effectiveness in outpatients with medication-resistant psychosis, both at treatment completion and at follow-up. They located 16 published articles describing 12 RCTs. Significant effects of CBT were found at post-treatment for positive symptoms (Hedges’ g=.47 [95%CI 0.27 to 0.67]) and for general symptoms (Hedges’ g=.52 [95%CI 0.35 to 0.70]). These effects were maintained at follow-up for both positive and general symptoms (Hedges’ g=.41 [95%CI 0.20 to 0.61] and .40 [95%CI 0.20 to 0.60], respectively).

Comment

Wait a moment.... what effect size is being calculated here? Unlike all other CBT for psychosis meta-analyses, these authors attempt to assess pre- to post-test change rather than the usual end-point differences between groups. Crucially - though not stated in the paper - the change effect size was calculated by taking the difference between the baseline and endpoint symptom means and then dividing by ...the pooled *endpoint* standard deviation (and not, as we might expect, the pooled 'change SD'). It is difficult to know what such a metric means, but the effect sizes reported by Burns et al clearly cannot be compared with those of any other meta-analysis or with the usual benchmarks of small, medium and large effects (pace Cohen).
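The three candidate metrics can be put side by side in a short sketch. All of the means, SDs, sample sizes and the pre-post correlation `r` below are hypothetical, and the "change divided by endpoint SD" line is only my reading of the calculation as described above, not code or data from Burns et al.

```python
import math

def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))

def change_sd(sd_pre, sd_post, r):
    """SD of pre-post change scores, given the pre-post correlation r."""
    return math.sqrt(sd_pre ** 2 + sd_post ** 2 - 2 * r * sd_pre * sd_post)

# HYPOTHETICAL symptom scores (lower = better), n = 30 per arm, SD = 5 throughout.
n = 30
cbt_pre, cbt_post = 22.0, 16.0
ctl_pre, ctl_post = 20.0, 18.0
sd = 5.0
r = 0.7  # assumed pre-post correlation; rarely reported in trial papers

# 1) Usual metric: between-group difference at endpoint / pooled endpoint SD.
d_endpoint = (ctl_post - cbt_post) / pooled_sd(sd, n, sd, n)

# 2) Difference in change scores / pooled ENDPOINT SD (the unusual metric).
change_diff = (cbt_pre - cbt_post) - (ctl_pre - ctl_post)
d_change_endpoint_sd = change_diff / pooled_sd(sd, n, sd, n)

# 3) Difference in change scores / pooled CHANGE SD.
sd_change = change_sd(sd, sd, r)
d_change_change_sd = change_diff / pooled_sd(sd_change, n, sd_change, n)

print(d_endpoint, d_change_endpoint_sd, d_change_change_sd)
```

The same hypothetical trial yields three different numbers depending on the numerator and denominator chosen, which is why effect sizes built one way cannot simply be read against benchmarks built another way.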

This meta-analysis also included the non-random Barretto et al trial, which again is contrary to its own inclusion criteria; and crucially, Barretto produced - by far - the largest effect size for general psychotic symptoms in this unusual analysis (see forest plot below).

3) van der Gaag et al (2014)

van der Gaag et al examined end-of-treatment effects of individually tailored case-formulation CBT on delusions and auditory hallucinations. They examined 18 studies with symptom-specific outcome measures. Statistically significant effect sizes were 0.36 for delusions and 0.44 for hallucinations. When compared to active treatment, CBT for delusions lost statistical significance (0.33), though CBT for hallucinations remained significant (0.49). Restricting to blinded studies reduced the effect size for delusions by almost a third (0.24) but unexpectedly had no impact on the effect size for hallucinations (0.46).

Comment

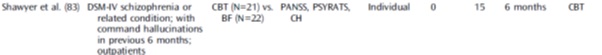

van der Gaag et al state they excluded studies that "...were not CBTp but other interventions (Chadwick et al., 2009; Shawyer et al., 2012; van der Gaag et al., 2012)". Shawyer et al is an interesting example, as Shawyer and colleagues recognize it as CBT, stating "The purpose of this trial was to evaluate...CBT augmented with acceptance-based strategies". The study also met the criterion of being individual and formulation-based.

More importantly, a clear inconsistency emerges, as Shawyer et al was counted as CBT in two other 2014 meta-analyses where van der Gaag is one of the authors. One is the Turner et al meta-analysis (described above), where they even classified it as having CBT allegiance bias (see the far-right classification in Turner et al below).

And....Shawyer et al is further included in a third meta-analysis, of CBT for negative symptoms, by Velthorst et al (described below), whose co-authors include both van der Gaag and Smit.

So, some of the same authors considered a study to be CBT in two meta-analyses, but not in a third. The exclusion of Shawyer et al matters because they showed that befriending significantly outperformed CBT in its impact on hallucinations. The effect sizes reported by Shawyer et al themselves at end of treatment for blind assessment (PSYRATS) give befriending an advantage over CBT of 0.37 and 0.52, and an advantage of 0.40 for distress from command hallucinations.

While the exclusion of Shawyer et al seems inexplicable, the inclusion of Leff et al (2013) as an example of CBT is highly questionable. Leff et al is the recent 'Avatar therapy' study, and nowhere does it even mention CBT. Indeed, in referring to Avatar therapy, Leff himself states that he "jettisoned some strategies borrowed from Cognitive Behaviour Therapy, and developed some new ones".

And finally...taking the endpoint CBT advantage of 0.47 for hallucinations in the recent unmedicated psychosis study by Morrison et al (2014) at face value overlooks the fact that precisely this magnitude of CBT advantage existed at baseline, i.e. before the trial began...and so it does not represent any CBT group improvement, but a pre-existing group difference in favour of CBT!

Removing the large effect size of .99 for Leff and including Shawyer et al, with a negative effect size of over .5, would clearly alter the picture, as would recognition that the patients receiving CBT in Morrison et al showed no change compared to controls. It would be surprising if the effect then remained significant...

Hiroshima Mon Amour (Ultravox)

4) Velthorst, Koeter, van der Gaag, Nieman, Fett, Smit, Staring, Meijer & de Haan (2014) Adapted cognitive–behavioural therapy required for targeting negative symptoms in schizophrenia: meta-analysis and meta-regression

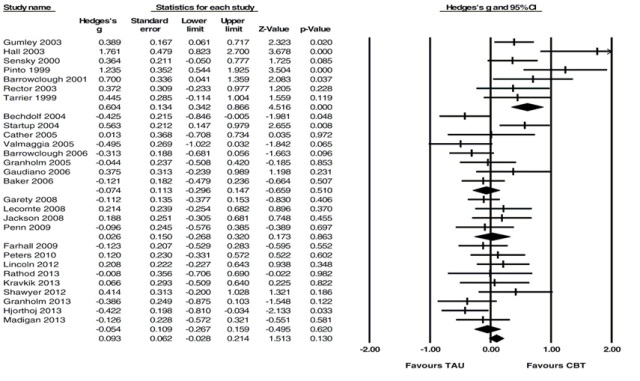

Velthorst and colleagues located 35 publications covering 30 trials. Their results showed the effect of CBT on negative symptoms to be nonsignificant, whether these were a secondary outcome [Hedges’ g = 0.093, 95% confidence interval (CI) −0.028 to 0.214, p = 0.130] or the primary outcome (Hedges’ g = 0.157, 95% CI −0.10 to 0.409, p = 0.225). Meta-regression revealed that stronger treatment effects were associated with earlier year of publication and lower study quality.
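Because a meta-analysis reports its intervals, anyone can check that the estimate, the confidence interval and the p-value hang together. The sketch below assumes a symmetric, normal-theory 95% interval (an assumption on my part, not something taken from the paper) and back-calculates the standard error and two-sided p-value from the figures quoted above; the function name `p_from_ci` is my own.

```python
from statistics import NormalDist

def p_from_ci(estimate, lower, upper, level=0.95):
    """Standard error, z and two-sided p implied by a symmetric normal-theory CI."""
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% CI
    se = (upper - lower) / (2 * z_crit)
    z = estimate / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return se, z, p

# Figures as quoted from Velthorst et al above.
secondary = p_from_ci(0.093, -0.028, 0.214)
primary = p_from_ci(0.157, -0.10, 0.409)

for label, (se, z, p) in (("secondary", secondary), ("primary", primary)):
    print(f"{label}: SE={se:.3f}, z={z:.2f}, p={p:.3f}")
```

Under that assumption the recovered p-values should land close to the reported 0.130 and 0.225, with any small gap attributable to rounding in the published figures. The same few lines can be pointed at any pooled estimate in the other meta-analyses discussed here.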

Comment

Aside from the lack of a significant effect, the main finding of this study was that the large effect sizes of early studies have shrunk massively, which reflects the increasing quality of later studies, e.g. more blind assessments.

Finally, Velthorst et al note the presence of adverse effects of CBT. This is most clearly visible in the forest plot below, where 13 of the last 21 studies (62%) show a greater reduction of negative symptoms in the Treatment as Usual group!